WooCommerce Hosting Performance Benchmarks 2020

Please read the Methodology to fully understand the scope of these tests.

Note: Please check company profiles for a summary of performance across multiple tiers. Some companies also offer promotions or coupon codes for a discount.

WooCommerce Hosting Performance Benchmarks is a spin-off of WordPress Hosting Performance Benchmarks and is designed to create a consistent set of benchmarks showing how WooCommerce-specialized web hosting companies perform. The focus of these tests is performance: not support, not features, not any other dimension. These benchmarks should be looked at in combination with other sources of information when making any hosting decision. Review Signal’s web hosting reviews have insights on some of the companies regarding aspects beyond performance. That said, for the performance conscious, these benchmarks should be a good guide.

The original post can be viewed here.

The major differences from the WordPress methodology are the following:

Setup

All tests were performed on an identical WooCommerce dummy website with the same plugins, except in cases where hosts added extra plugins or code. The Storefront theme was used with the following sample products. The following plugins were installed: Jetpack, WooCommerce, WooCommerce Admin, WooCommerce Services, WooCommerce Stripe Gateway, and WP Performance Tester.

The WooCommerce-specific settings were: a US address, USD currency, digital products, the Stripe payment gateway, the Storefront theme, and automated taxes with Jetpack installed.

LoadStorm

The LoadStorm test was the one test unique to WooCommerce. Four different profiles were created, each with its own share of users (the percentage after the profile name) and all with a 5-10 second page think time. The test scaled from 10 to 1,000 concurrent users over 40 minutes and stayed at 1,000 concurrent users for 20 minutes (60 minute test, 20 minute peak).

Profile 1 (20%): Buyer – Homepage, add item to cart, go to cart, checkout (doesn’t submit order)

Profile 2 (10%): Customer (existing) – Homepage, login, view orders, view account details

Profile 3 (20%): Browser – Homepage, second page, product, related product, homepage, product, related product

Profile 4 (50%): Home – Homepage only, called Visitor profile last year
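The schedule and user split above can be sketched in a few lines of Python. This is only an illustration: the linear ramp is an assumption (the source gives the start, the peak and the durations, not the shape of the ramp), and the names `concurrent_users` and `users_per_profile` are hypothetical helpers, not part of LoadStorm.

```python
# Profile shares in percent, as listed above.
PROFILE_SHARE_PERCENT = {"Buyer": 20, "Customer": 10, "Browser": 20, "Home": 50}

RAMP_MINUTES = 40   # time spent scaling up
HOLD_MINUTES = 20   # time spent at peak
START_USERS = 10
PEAK_USERS = 1000


def concurrent_users(minute):
    """Target concurrent users at a given minute, assuming a linear ramp."""
    if minute < 0 or minute > RAMP_MINUTES + HOLD_MINUTES:
        raise ValueError("minute is outside the 60-minute test window")
    if minute >= RAMP_MINUTES:
        return PEAK_USERS
    fraction = minute / RAMP_MINUTES
    return round(START_USERS + fraction * (PEAK_USERS - START_USERS))


def users_per_profile(total_users):
    """Split a user count across the four profiles by their percentage share."""
    return {
        name: round(total_users * percent / 100)
        for name, percent in PROFILE_SHARE_PERCENT.items()
    }


if __name__ == "__main__":
    for minute in (0, 20, 40, 60):
        total = concurrent_users(minute)
        print(minute, total, users_per_profile(total))
```

At the 1,000-user peak this split works out to 200 Buyers, 100 Customers, 200 Browsers and 500 Home visitors.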

Load Impact

The Load Impact test followed the $51-100/month price tier of the WordPress methodology, with load scaling from 1 to 2,000 users over 15 minutes.

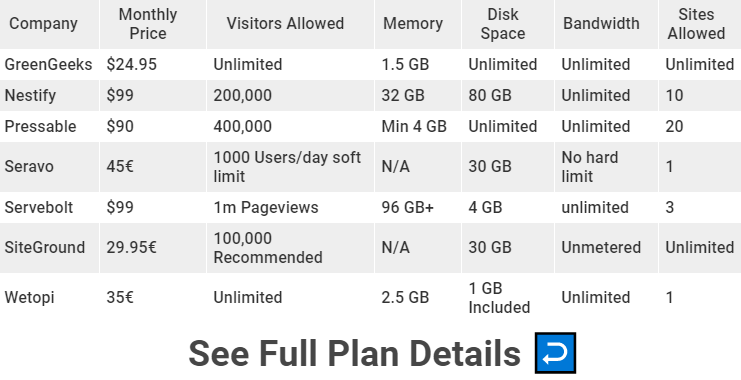

Companies and Products

LoadStorm Testing Results

LoadStorm is designed to simulate real users visiting the site, logging in and browsing. It tests uncached performance.

Results Table

| Company | Total Requests | Total Errors | Peak RPS | Average RPS | Peak Response Time (ms) | Average Response Time (ms) | Total Data Transferred | Peak Throughput | Average Throughput | Woo Buyer Profile (ms) | Woo Customer Profile (ms) | Woo Browser Profile (ms) | Woo Home Profile (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GreenGeeks | 1985092 | 258 | 788.48 | 551.41 | 15106 | 183 | 50.34 | 19.92 | 13.98 | 698 | 908 | 463 | 470 |
| Nestify | 4018521 | 3 | 1544.18 | 1116.26 | 15075 | 53 | 99.46 | 37.98 | 27.63 | 485 | 446 | 463 | 266 |
| Pressable | 4773225 | 2 | 1847.27 | 1325.90 | 15049 | 44 | 119.45 | 46.17 | 33.18 | 504 | 511 | 102 | 89 |
| Seravo | 1702737 | 343818 | 576.37 | 472.98 | 15013 | 283 | 36.56 | 11.75 | 10.15 | 707 | 674 | 720 | 661 |
| Servebolt | 5125262 | 218 | 2016.50 | 1423.68 | 15106 | 50 | 118.16 | 46.58 | 32.82 | 383 | 401 | 398 | 88 |
| SiteGround | 2350950 | 6 | 914.48 | 653.04 | 10028 | 131 | 58.49 | 22.87 | 16.25 | 276 | 343 | 156 | 120 |
| Wetopi | 1985848 | 120 | 729.80 | 551.62 | 10362 | 188 | 46 | 16.91 | 12.78 | 977 | 845 | 619 | 523 |

Discussion

GreenGeeks, Nestify, Pressable, Servebolt, SiteGround and Wetopi handled LoadStorm without issue.

Seravo had a similar issue in its other load tests, in the $101-200 tier of the WordPress Hosting Performance Benchmarks. The cause was a Docker proxy bug that couldn't be resolved during the tests.
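To put the error counts in proportion, the error rate for each host can be computed from the Total Requests and Total Errors columns of the results table. The snippet below is a small sketch; the dictionary layout and function names are illustrative choices, not something LoadStorm provides.

```python
# (total requests, total errors) per company, copied from the results table.
LOADSTORM_TOTALS = {
    "GreenGeeks": (1985092, 258),
    "Nestify": (4018521, 3),
    "Pressable": (4773225, 2),
    "Seravo": (1702737, 343818),
    "Servebolt": (5125262, 218),
    "SiteGround": (2350950, 6),
    "Wetopi": (1985848, 120),
}


def error_rate_percent(requests, errors):
    """Errors as a percentage of all requests."""
    return 100.0 * errors / requests


def error_rates():
    """Error rate in percent for every company in the table."""
    return {
        company: error_rate_percent(requests, errors)
        for company, (requests, errors) in LOADSTORM_TOTALS.items()
    }


if __name__ == "__main__":
    for company, rate in sorted(error_rates().items(), key=lambda item: item[1]):
        print(f"{company:<12}{rate:8.3f}%")
```

Seravo's rate comes out at roughly 20% of all requests, while every other host stays below 0.02%.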

I decided to take a deeper look at the results and analyze the performance of loading HTML MIME types only, ignoring the many static assets that are cached and bring down average response times.

I broke it down by profile because each script is different in terms of which endpoints on WooCommerce they are hitting.

LoadStorm Average Response Time by Profile

This takes a deeper look at the results and analyzes the performance of loading HTML MIME types only, ignoring the many static assets that are cached and bring down average response times. It tests how fast the initial pages are delivered, which is what a user would experience before loading all the other assets like CSS, JavaScript and images.

Results Table

| Company | Buyer Profile (ms) | Customer Profile (ms) | Browser Profile (ms) | Home Profile (ms) |
|---|---|---|---|---|
| GreenGeeks | 698 | 908 | 463 | 470 |
| Nestify | 485 | 446 | 463 | 266 |
| Pressable | 504 | 511 | 102 | 89 |
| Seravo | 707 | 674 | 720 | 661 |
| Servebolt | 383 | 401 | 398 | 88 |
| SiteGround | 276 | 343 | 156 | 120 |
| Wetopi | 977 | 845 | 619 | 523 |

*Seravo is included, but its high error rate means these numbers are not very meaningful.

What we can see from the results is that the homepage only (Home profile, called Visitor last year) is the fastest profile for every company except GreenGeeks, where the Browser profile was marginally faster (463ms vs 470ms). That would be expected since the page should be cached and it's only hitting that single endpoint.

The second fastest profile in general seems to be the product viewer (Browser profile), who simply looks at different products on the site.

The Buyer and Existing Customer profiles appear to be the heaviest, although it's unclear from the results whether one is more stressful for WooCommerce than the other. Both have to deal with data being sent to the server and handled in a unique manner for each request.

It is reassuring to see that the generally expected outcome matches the reality. It's also nice to see the average response time under 1000ms for even the heavier pages.
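The per-profile comparison can be checked directly against the table above. The following is a minimal sketch with hypothetical helper names; the numbers are the average response times in milliseconds from the table.

```python
# Average response time in ms per profile, copied from the table above.
PROFILE_MS = {
    "GreenGeeks": {"Buyer": 698, "Customer": 908, "Browser": 463, "Home": 470},
    "Nestify": {"Buyer": 485, "Customer": 446, "Browser": 463, "Home": 266},
    "Pressable": {"Buyer": 504, "Customer": 511, "Browser": 102, "Home": 89},
    "Seravo": {"Buyer": 707, "Customer": 674, "Browser": 720, "Home": 661},
    "Servebolt": {"Buyer": 383, "Customer": 401, "Browser": 398, "Home": 88},
    "SiteGround": {"Buyer": 276, "Customer": 343, "Browser": 156, "Home": 120},
    "Wetopi": {"Buyer": 977, "Customer": 845, "Browser": 619, "Home": 523},
}


def fastest_profile_by_company():
    """Name of the profile with the lowest average response time per company."""
    return {
        company: min(times, key=times.get)
        for company, times in PROFILE_MS.items()
    }


def slowest_response_ms():
    """Highest average response time across all companies and profiles."""
    return max(ms for times in PROFILE_MS.values() for ms in times.values())


if __name__ == "__main__":
    for company, profile in fastest_profile_by_company().items():
        print(f"{company:<12}{profile}")
    print("Slowest average (ms):", slowest_response_ms())
```

Run as is, this reports Home as the fastest profile for six of the seven hosts (Browser for GreenGeeks, by 7ms) and a slowest average of 977ms.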

K6 Static Testing Results

The K6 static test is designed to test cached performance by repeatedly requesting the homepage.

Results Table

| Company | Requests | Errors | Peak Average Response Time (ms) |
|---|---|---|---|
| GreenGeeks | 626519 | 0 | 556 |
| Nestify | 728945 | 0 | 697 |
| Pressable | 899263 | 0 | 208 |
| Seravo | 632203 | 0 | 565 |
| Servebolt | 843550 | 12 | 161 |
| SiteGround | 808259 | 0 | 220 |
| Wetopi | 487848 | 551 | 1920 |

Discussion

The response time shown is the Peak Average Response Time reported by Load Impact, not an overall average.

GreenGeeks, Nestify, Pressable, Seravo, Servebolt and SiteGround all handled this test without issue.

Wetopi struggled somewhat, with its peak average load time reaching 1920ms.

Uptime Testing Results

Uptime is monitored by two companies: HetrixTools and Uptime Robot. A self hosted monitor was also run in case there was a major discrepancy between the two third party monitors.
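The cross-check described here can be sketched as follows, using the figures from the results table in this section. The 0.1 percentage point threshold for a "major discrepancy" is a hypothetical value chosen for illustration (the source does not define one), and `suspect_monitor` is an illustrative helper, not part of any monitoring tool.

```python
# Uptime in percent as (Uptime Robot, HetrixTools, self-hosted monitor).
# None marks a value that is missing from the results table.
UPTIME = {
    "GreenGeeks": (99.911, 99.99, 100.0),
    "Nestify": (100.0, 100.0, 100.0),
    "Pressable": (100.0, 100.0, 100.0),
    "Seravo": (99.997, 100.0, 100.0),
    "Servebolt": (99.583, 99.99, 99.99),
    "SiteGround": (99.996, 99.99, None),
    "Wetopi": (99.993, 99.98, 100.0),
}

# Hypothetical threshold in percentage points; not defined by the source.
DISCREPANCY_THRESHOLD = 0.1


def suspect_monitor(uptime_robot, hetrix, self_hosted,
                    threshold=DISCREPANCY_THRESHOLD):
    """Return the third-party monitor that looks wrong, or None if they agree."""
    if abs(uptime_robot - hetrix) <= threshold:
        return None  # the two third-party monitors agree closely enough
    if self_hosted is None:
        return "unknown"  # no tiebreaker reading available
    # The monitor further from the self-hosted reading is the likely outlier.
    if abs(uptime_robot - self_hosted) > abs(hetrix - self_hosted):
        return "Uptime Robot"
    return "HetrixTools"


if __name__ == "__main__":
    for company, readings in UPTIME.items():
        print(f"{company:<12}{suspect_monitor(*readings)}")
```

With this threshold only Servebolt is flagged, and the self-hosted reading points to Uptime Robot as the outlier.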

Results Table

| Company | Uptime Robot (%) | HetrixTools (%) | Alt Uptime Monitor (%) |
|---|---|---|---|
| GreenGeeks | 99.911 | 99.99 | 100 |
| Nestify | 100 | 100 | 100 |
| Pressable | 100 | 100 | 100 |
| Seravo | 99.997 | 100 | 100 |
| Servebolt | 99.583 | 99.99 | 99.99 |
| SiteGround | 99.996 | 99.99 | |
| Wetopi | 99.993 | 99.98 | 100 |

Discussion

Every company was above the 99.9% threshold I would expect from any company. The only issue was a strange discrepancy between Uptime Robot and HetrixTools on Servebolt's uptime: Uptime Robot showed a very low 99.583% while HetrixTools showed 99.99%. The third monitor I set up also showed 99.99%, so the issue appears to be with Uptime Robot.

WebPageTest Testing Results

WebPageTest fully loads the homepage and records how long it takes from 12 different locations around the world. Results are measured in seconds.

Results Table

| Company | California | London | Frankfurt | Singapore | Mumbai | Sydney | Virginia | Tokyo | Chicago | Israel | Rose Hill Mauritius | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GreenGeeks | 1.325 | 1.158 | 1.23 | 2.634 | 2.519 | 2.681 | 0.893 | 1.955 | 1.149 | 1.715 | 3.323 | 1.715 |
| Nestify | 2 | 2.686 | 0.661 | 3.9 | 4.229 | 3.49 | 0.703 | 2.974 | 1.281 | 2.521 | 5.572 | 2.501 |
| Pressable | 1.652 | 1.118 | 1.251 | 1.682 | 1.798 | 1.534 | 0.786 | 1.528 | 1.111 | 2.071 | 2.686 | 1.435 |
| Seravo | 1.37 | 1.145 | 1.282 | 2.705 | 2.65 | 2.596 | 0.708 | 2.106 | 1.146 | 1.791 | 3.511 | 1.751 |
| Servebolt | 1.789 | 1.03 | 0.888 | 1.763 | 2.346 | 1.278 | 0.657 | 1.085 | 1.264 | 1.832 | 2.221 | 1.346 |
| SiteGround | 1.486 | 1.34 | 1.429 | 2.551 | 3.138 | 2.685 | 0.738 | 1.612 | 1.048 | 1.994 | 4.458 | 1.873 |
| Wetopi | 1.707 | 0.594 | 2.103 | 4.315 | 1.447 | 3.445 | 0.948 | 2.935 | 1.517 | 1.158 | 2.086 | 1.855 |

Discussion

Servebolt was the fastest in three locations and had the lowest average response time around the world, doing consistently well amongst its peers. Wetopi was the fastest in four locations and the slowest in four, an interesting pattern. Nestify was the slowest in 7/12 locations, which is a little disappointing, but it ends up being the fastest in Frankfurt and second fastest in Dulles (Virginia).
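The fastest and slowest host per location can be tallied from the WebPageTest results table. The sketch below uses the 11 location columns that appear in that table (the Average column is left out); the function name and data layout are illustrative choices.

```python
from collections import Counter

LOCATIONS = [
    "California", "London", "Frankfurt", "Singapore", "Mumbai", "Sydney",
    "Virginia", "Tokyo", "Chicago", "Israel", "Rose Hill Mauritius",
]

# Load times in seconds, in the same order as LOCATIONS.
LOAD_SECONDS = {
    "GreenGeeks": [1.325, 1.158, 1.23, 2.634, 2.519, 2.681, 0.893, 1.955, 1.149, 1.715, 3.323],
    "Nestify": [2, 2.686, 0.661, 3.9, 4.229, 3.49, 0.703, 2.974, 1.281, 2.521, 5.572],
    "Pressable": [1.652, 1.118, 1.251, 1.682, 1.798, 1.534, 0.786, 1.528, 1.111, 2.071, 2.686],
    "Seravo": [1.37, 1.145, 1.282, 2.705, 2.65, 2.596, 0.708, 2.106, 1.146, 1.791, 3.511],
    "Servebolt": [1.789, 1.03, 0.888, 1.763, 2.346, 1.278, 0.657, 1.085, 1.264, 1.832, 2.221],
    "SiteGround": [1.486, 1.34, 1.429, 2.551, 3.138, 2.685, 0.738, 1.612, 1.048, 1.994, 4.458],
    "Wetopi": [1.707, 0.594, 2.103, 4.315, 1.447, 3.445, 0.948, 2.935, 1.517, 1.158, 2.086],
}


def count_extremes():
    """Count how often each company is the fastest and the slowest location-wise."""
    fastest, slowest = Counter(), Counter()
    for index in range(len(LOCATIONS)):
        by_company = {name: times[index] for name, times in LOAD_SECONDS.items()}
        fastest[min(by_company, key=by_company.get)] += 1
        slowest[max(by_company, key=by_company.get)] += 1
    return fastest, slowest


if __name__ == "__main__":
    fastest, slowest = count_extremes()
    print("Fastest:", dict(fastest))
    print("Slowest:", dict(slowest))
```

On the listed columns, Servebolt is fastest in three locations, Wetopi is fastest in four and slowest in four, and Nestify is slowest in seven.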

WPPerformanceTester Testing Results

WPPerformanceTester performs two benchmarks. One is a WordPress benchmark (WP Bench) and the other is a PHP benchmark (PHP Bench). WP Bench measures how many WP queries are completed per second, and higher tends to be better (though it varies considerably by architecture). PHP Bench performs a lot of computational operations and some database operations, and measures the seconds taken to complete them. Lower PHP Bench is better.

Results Table

| Company | PHP Bench | WP Bench |
|---|---|---|
| GreenGeeks | 9.92 | 1402.524544 |
| Nestify | 7.483 | 809.7165992 |
| Pressable | 6.369 | 1317.523057 |
| Seravo | 8.094 | 400.8016032 |
| Servebolt | 3.271 | 2008.032129 |
| SiteGround | 9.493 | 425.8943782 |
| Wetopi | 11.343 | 576.3688761 |

Discussion

Servebolt was the obvious standout here. They had the highest queries per second and lowest PHP Bench time.

SSL Testing Results

The tool is available at https://www.ssllabs.com/ssltest/

Results Table

| Company | Qualys SSL Grade |
|---|---|
| GreenGeeks | A+ |
| Nestify | B |
| Pressable | A |
| Seravo | B |
| Servebolt | B |
| SiteGround | A |
| Wetopi | B |

Discussion

GreenGeeks was the only company to receive an A+ rating.

Conclusion

There are two levels of recognition awarded to companies that participate in the tests. There is no ‘best’ declared; it’s simply tiered, because the complex nature of hosting makes it hard to come up with an objective ranking system. These tests also don’t take into account outside factors such as reviews, support, and features. They simply test performance as described in the methodology.

Top Tier

This year's Top Tier WordPress Hosting Performance Award goes to the following companies who showed virtually no signs of struggle during the testing.

Honorable Mention

The following companies earned Honorable Mention status because they did very well and had a minor issue or two holding them back from earning Top Tier status.

No company achieved this status.